If you randomly position your tongue, would you be able to guess which vowel will be pronounced before making a sound? The answer is yes according to the results produced by a multidisciplinary team of experts in Grenoble (Gipsa-lab* and LPNC**) and two Canadian teams, including Professor David Ostry’s team at McGill University in Montreal. Despite their rather logical outcome, the findings were apparently not so obvious at the start of the project.

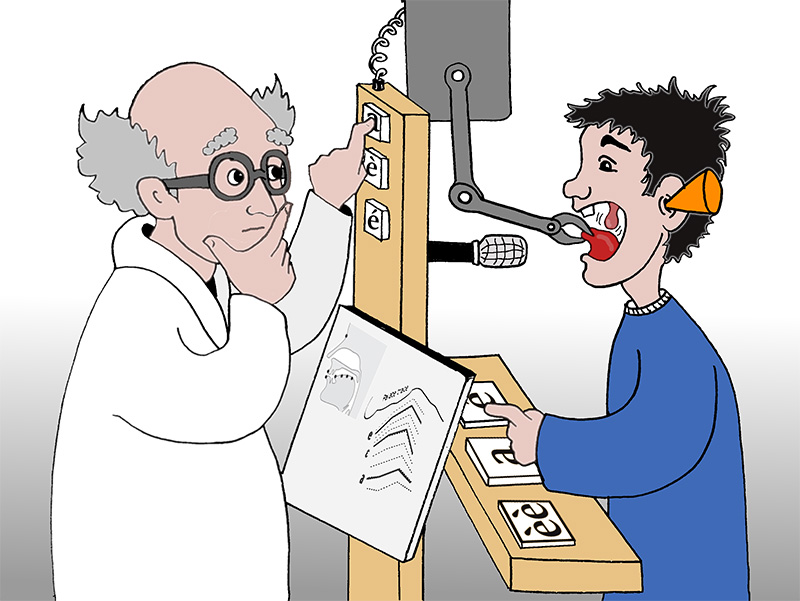

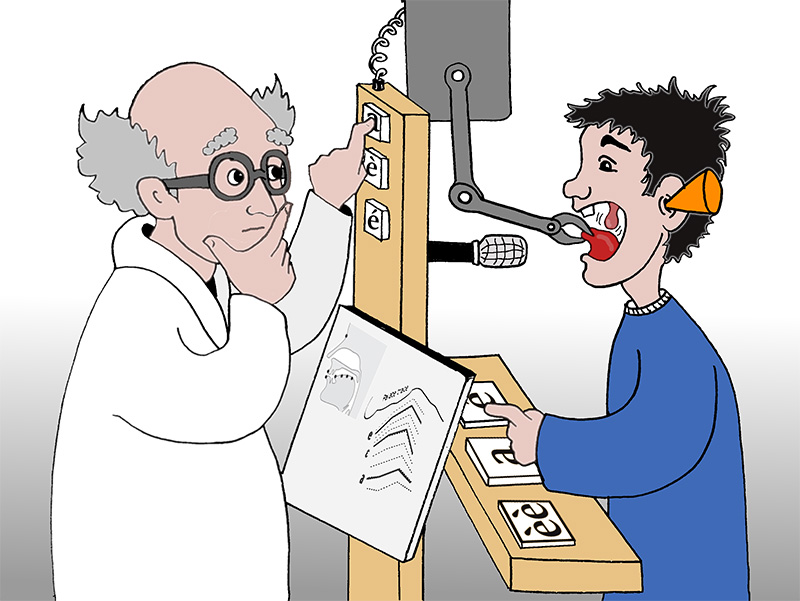

To reach this conclusion, the researchers used a clever experimental protocol. “We placed small sensors around the tongues of volunteer participants. We then asked them to move their tongues to certain positions using a visual feedback system that enabled them to point their tongue at a specific target much like a joystick,” explains Jean-luc Schwartz, CNRS research director at Gipsa-lab and co-author of the research published in PNAS***. “Once participants were able to position their tongue as required, they were asked to guess which vowel would be produced by their tongue shape without making any noise. In general, they were able to predict the right vowel just as efficiently as if they had heard the vowel pronounced.”

In order to test somatosensory feedback without the aid of spoken sounds, researchers could have used a device to hold and move the participant’s tongue. To avoid this uncomfortable approach, they had to create a sophisticated experimental protocol that was much more comfortable for participants.

Illustration: Sandra Reinhard©

Each tongue position matches a sound

These research results indicate that our brain is able to collect and analyze somatosensory data based on the shape of a tongue and a related language. “When we speak, we not only produce sound, but also create a specific tongue shape that impacts the shape of our vocal track and this is what creates characteristic sounds useful for spoken language,” adds Pascal Perrier, a professor at Grenoble INP, a researcher at Gipsa-lab and co-author of the PNAS publication. “Thus, when we speak we also create somatosensory feedback that is part of fundamental information, in addition to sound, that enables us to control the quality and accuracy of speech.”

While these findings are of great interest in theoretical terms, they also open the door to interesting possibilities in terms of rehabilitation for children suffering from speech disorders or adults who partially lose their hearing. For children, learning is so efficient that somatosensory feedback could enable them to grasp accurate speech and tongue movements without having perfect auditory feedback.

Improving artificial language models

In more theoretical terms, this new knowledge will enable researchers to further develop models for speech production. It will help researchers pilot complexe physical, biomechanical and acoustic models for speech simulation. With these models, it is possible to simulate the creation of sounds by controlling specific tongue muscles.

A simplified biomechanical probabilistic model that simulates the effect of primary tongue muscles on the vocal tract was developed by Jean-François Patri, leading author of the PNAS publication and a doctoral graduate of the Gipsa-lab (under the direction of Pascal Perrier and Julien Diard, CNRS head of research at LPNC and also co-author of the publication).

This probabilistic model could be inserted in artificial intelligence applications in order to efficiently integrate muscular simulation, tongue shapes and sounds for machine learning. This research work is being developed under the framework of the Bayesian Cognition and Machine Learning for Speech Communication Chair led by Pascal Perrier at the MIAI Grenoble Alps Institute. A major goal for the chair is to generate machine speech using artificial intelligence tools that would simulate human speech patterns in humanoid robots.

* GIPSA-lab, Grenoble Images Parole Signal Automatique, CNRS, Grenoble INP, UGA

** LPNC, Laboratoire de Psychologie et NeuroCognition, CNRS, UGA

*** Patri, J.F., Ostry, D.J., , Diard, J., Schwartz, J.L., Trudeau-Fisette, P., Savariaux, C., & Perrier, P. Speakers are able to categorize vowels based on tongue somatosensation. Proceedings of the National Academy of Sciences, in press.